I recently did a quick study of Spark on K8s and ran into a few problems along the way, so I'm summarizing them here to share with everyone.

Environment

Hadoop cluster: deployed on physical machines

Spark: running on k8s

Requirement

Use Spark to read LZO files stored on a remote Hadoop cluster.

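Problem

Building the container from the Dockerfile shipped with Spark works fine. However, the data in my test environment is LZO-compressed, so when Spark reads it, the job fails with a native library error: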

```text
19/09/20 06:02:46 WARN LzoCompressor: java.lang.UnsatisfiedLinkError: Cannot load liblzo2.so.2 (liblzo2.so.2: cannot open shared object file: No such file or directory)!
19/09/20 06:02:46 ERROR LzoCodec: Failed to load/initialize native-lzo library
19/09/20 06:02:46 ERROR Executor: Exception in task 0.0 in stage 0.0 (TID 0)
java.lang.RuntimeException: native-lzo library not available
    at com.hadoop.compression.lzo.LzopCodec.createDecompressor(LzopCodec.java:104)
    at com.hadoop.compression.lzo.LzopCodec.createInputStream(LzopCodec.java:89)
    at com.hadoop.mapreduce.LzoLineRecordReader.initialize(LzoLineRecordReader.java:104)
    at org.apache.spark.rdd.NewHadoopRDD$$anon$1.<init>(NewHadoopRDD.scala:168)
    at org.apache.spark.rdd.NewHadoopRDD.compute(NewHadoopRDD.scala:133)
    at org.apache.spark.rdd.NewHadoopRDD.compute(NewHadoopRDD.scala:65)
    at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:306)
    at org.apache.spark.rdd.RDD.iterator(RDD.scala:270)
    at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:38)
    at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:306)
```
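The key line is the first one: the JVM could not find `liblzo2.so.2`. Before changing the image, it can help to confirm inside a running container whether that file exists at all. The following is a minimal sketch; `find_lib` is a hypothetical helper name, and the directories in the usage line are assumptions about where alpine keeps shared libraries.

```sh
#!/bin/sh
# find_lib (hypothetical helper): look for a shared library file in each
# directory given. Prints the first matching path and returns 0,
# or returns 1 if the library is not present in any of the directories.
find_lib() {
  lib_name="$1"
  shift
  for lib_dir in "$@"; do
    if [ -e "$lib_dir/$lib_name" ]; then
      printf '%s\n' "$lib_dir/$lib_name"
      return 0
    fi
  done
  return 1
}
```

For example, inside the executor container: `find_lib liblzo2.so.2 /usr/lib /lib || echo "liblzo2.so.2 is missing"`.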

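Solution

Spark has supported running on k8s since version 2.3.0. For k8s deployments, the Dockerfile provided in the Spark source uses openjdk:8-alpine as its base image. Because the image is based on alpine and the Dockerfile does not install the native libraries needed to read LZO (and similar) files, jobs that read LZO files fail.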
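To confirm that a running Spark container really uses the alpine base image, one option is to read the `ID` field of `/etc/os-release`. A minimal sketch, assuming the image provides that file; the helper name `is_alpine` is hypothetical.

```sh
#!/bin/sh
# is_alpine (hypothetical helper): read an os-release file
# (default /etc/os-release) and succeed only if its ID field is "alpine".
is_alpine() {
  release_file="${1:-/etc/os-release}"
  [ -r "$release_file" ] || return 1
  # Accept both ID=alpine and ID="alpine"
  grep -Eq '^ID="?alpine"?$' "$release_file"
}
```

Usage inside the container: `is_alpine && echo "alpine base image"`.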

Steps:

1. Install the lzo dependency in alpine:

```sh
apk add lzo --no-cache
```

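2. Rebuild the hadoop-lzo native library inside an alpine container (alpine uses musl libc rather than glibc, so a native library built on a typical glibc-based host may not load there).

Download the hadoop-lzo source code:, copy it into the alpine container, and run: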

```sh
mvn clean package -Dmaven.test.skip=true
```

If the build fails, install the following build environment:

```sh
echo "" > /etc/apk/repositories
echo "" >> /etc/apk/repositories
echo "" >> /etc/apk/repositories
apk update --no-cache
apk add gcc --no-cache
apk add g++ --no-cache
apk add lzo --no-cache
apk add lzo-dev --no-cache
apk add make --no-cache
```
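Before rerunning Maven, a quick check of which build tools are still missing can save a failed build. This is a sketch with a hypothetical helper `missing_tools`; the tool list in the usage line (`gcc`, `g++`, `make`, `mvn`) is an assumption based on the packages and the Maven command above.

```sh
#!/bin/sh
# missing_tools (hypothetical helper): print each command name from the
# argument list that is not found on PATH, one per line.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}
```

Running `missing_tools gcc g++ make mvn` prints nothing when everything is installed.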

Then rebuild. Once the build succeeds, look for the native directory under target.

Built on alpine v3.9:

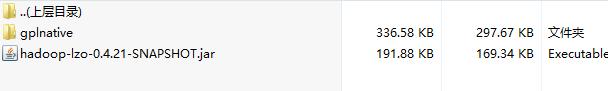

The hadoop-lzo.jar dependency:

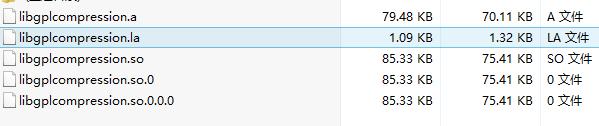

LZO native library files:

Copy these files out first; they will be needed later when submitting the job.
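Copying the outputs out of `target/` can be scripted. The sketch below is an illustration under assumptions, not the author's procedure: `collect_gpl_artifacts` is a hypothetical name, it searches `target/` for `libgplcompression*` files because the exact subdirectory may vary, and it writes them in the `gplnative/` plus jar layout that the Dockerfile in step 3 expects.

```sh
#!/bin/sh
# collect_gpl_artifacts (hypothetical helper): gather hadoop-lzo build outputs
# into the layout used by the Dockerfile:
#   <out_dir>/gplnative/libgplcompression*   native library files
#   <out_dir>/hadoop-lzo-*.jar               the hadoop-lzo jar
collect_gpl_artifacts() {
  target_dir="$1"
  out_dir="$2"
  mkdir -p "$out_dir/gplnative" || return 1
  # Search anywhere under target/ for the native files (skip directories).
  # Plain cp follows symlinks, so .so/.so.0 links become regular file copies.
  find "$target_dir" -name 'libgplcompression*' ! -type d \
    -exec cp {} "$out_dir/gplnative/" \;
  # Copy the main jar from the top of target/, skipping sources/javadoc jars.
  find "$target_dir" -maxdepth 1 -name 'hadoop-lzo-*.jar' \
    ! -name '*-sources.jar' ! -name '*-javadoc.jar' \
    -exec cp {} "$out_dir/" \;
}
```

For example, `collect_gpl_artifacts hadoop-lzo/target glplib` writes into `glplib`, which matches the default value of the Dockerfile's `gpl_libs` build argument.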

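3. Specify the LZO native library and the hadoop-lzo.jar dependency when submitting.

Below is the modified Dockerfile. It requires hadoop-lzo-0.4.21-SNAPSHOT.jar and the hadoop-lzo native library, laid out as follows: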

```text
.
├── gplnative
│   ├── libgplcompression.a
│   ├── libgplcompression.la
│   ├── libgplcompression.so
│   ├── libgplcompression.so.0
│   └── libgplcompression.so.0.0.0
└── hadoop-lzo-0.4.21-SNAPSHOT.jar
```
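A quick way to make sure this directory is complete before building the image is to check for each expected file. A minimal sketch with a hypothetical helper `check_gpl_layout`; it checks only the shared-object files and the jar, on the assumption that the static archive (`.a`) and libtool file (`.la`) are not needed at runtime.

```sh
#!/bin/sh
# check_gpl_layout (hypothetical helper): print every expected file that is
# missing under the given directory; return 0 only if all are present.
check_gpl_layout() {
  base_dir="$1"
  status=0
  for rel_path in \
    gplnative/libgplcompression.so \
    gplnative/libgplcompression.so.0 \
    gplnative/libgplcompression.so.0.0.0 \
    hadoop-lzo-0.4.21-SNAPSHOT.jar; do
    if [ ! -e "$base_dir/$rel_path" ]; then
      printf 'missing: %s\n' "$rel_path"
      status=1
    fi
  done
  return "$status"
}
```

Running `check_gpl_layout glplib` before `docker build` reports any file that was not copied.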

Change the Dockerfile to the following:

```dockerfile
FROM openjdk:8-alpine

ARG spark_jars=jars
ARG gpl_libs=glplib
ARG img_path=kubernetes/dockerfiles

# Before building the docker image, first build and make a Spark distribution following
# the instructions in
# If this docker file is being used in the context of building your images from a Spark
# distribution, the docker build command should be invoked from the top level directory
# of the Spark distribution. E.g.:
# docker build -t spark:latest -f kubernetes/dockerfiles/spark/Dockerfile .

RUN set -ex && \
    echo "" > /etc/apk/repositories && \
    echo "" >> /etc/apk/repositories && \
    echo "" >> /etc/apk/repositories && \
    apk upgrade --no-cache && \
    apk add --no-cache bash tini libc6-compat && \
    # Runtime LZO library (provides liblzo2.so.2)
    apk add --no-cache lzo && \
    mkdir -p /opt/spark && \
    mkdir -p /opt/spark/work-dir && \
    touch /opt/spark/RELEASE && \
    rm /bin/sh && \
    ln -sv /bin/bash /bin/sh && \
    chgrp root /etc/passwd && chmod ug+rw /etc/passwd

COPY ${spark_jars} /opt/spark/jars
# hadoop-lzo jar and the natively rebuilt libgplcompression files
COPY ${gpl_libs}/hadoop-lzo-0.4.21-SNAPSHOT.jar /opt/spark/jars/
COPY ${gpl_libs}/gplnative /opt/gplnative
COPY bin /opt/spark/bin
COPY sbin /opt/spark/sbin
COPY conf /opt/spark/conf
COPY ${img_path}/spark/entrypoint.sh /opt/
COPY examples /opt/spark/examples
COPY data /opt/spark/data

ENV SPARK_HOME /opt/spark

WORKDIR /opt/spark/work-dir

ENTRYPOINT [ "/opt/entrypoint.sh" ]
```
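The Dockerfile comment shows how the stock image is built; with the extra `gpl_libs` argument, the build can point at wherever the LZO files were collected. A sketch of a wrapper follows. `build_spark_lzo_image` and the `DOCKER` override variable are hypothetical, and the directory passed as the second argument must lie inside the build context (the top level of the Spark distribution). If `--build-arg` is omitted, the Dockerfile falls back to its default, `glplib`.

```sh
#!/bin/sh
# build_spark_lzo_image (hypothetical wrapper), run from the top level of the
# Spark distribution.
#   $1: image tag
#   $2: directory inside the build context holding gplnative/ and the
#       hadoop-lzo jar; passed to the Dockerfile's gpl_libs ARG.
# Set DOCKER=echo to print the command instead of running it.
build_spark_lzo_image() {
  image_tag="$1"
  gpl_dir="$2"
  ${DOCKER:-docker} build -t "$image_tag" \
    --build-arg gpl_libs="$gpl_dir" \
    -f kubernetes/dockerfiles/spark/Dockerfile .
}
```

For example: `build_spark_lzo_image spark-lzo:latest glplib`.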

When submitting the job, add these parameters:

```sh
--conf spark.executor.extraLibraryPath=/opt/gplnative \
--conf spark.driver.extraLibraryPath=/opt/gplnative
```
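Put together, a cluster-mode submission might look like the sketch below. Only the two `extraLibraryPath` settings come from this article; the k8s master URL, image name, main class, and application jar are placeholders, and `submit_lzo_job` and the `SPARK_SUBMIT` override are hypothetical. `spark.kubernetes.container.image` is the standard Spark setting for choosing the container image.

```sh
#!/bin/sh
# submit_lzo_job (hypothetical wrapper around spark-submit).
#   $1: k8s API server URL (without the k8s:// prefix)
#   $2: container image built from the Dockerfile above
#   $3: main class    $4: application jar    remaining args: application args
# Set SPARK_SUBMIT=echo to print the command instead of submitting.
submit_lzo_job() {
  master="$1"; image="$2"; main_class="$3"; app_jar="$4"
  shift 4
  ${SPARK_SUBMIT:-spark-submit} \
    --master "k8s://$master" \
    --deploy-mode cluster \
    --class "$main_class" \
    --conf spark.kubernetes.container.image="$image" \
    --conf spark.executor.extraLibraryPath=/opt/gplnative \
    --conf spark.driver.extraLibraryPath=/opt/gplnative \
    "$app_jar" "$@"
}
```

Setting `SPARK_SUBMIT=echo` prints the assembled command without submitting anything, which is handy for checking the flags.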

With that, Spark on k8s can read LZO files!

If you found this useful, feel free to follow! Let's learn and grow together!