Preface

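It's Friday again! Today we fill in the holes and defuse the mines we planted earlier~~ Our hands-on course is also nearing its end. I will walk you through the problem left over from the last lesson, and then show you how to set up an EFK logging solution and a Weave monitoring solution.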

Daily Analysis (Answer to the 11.14 Question of the Day)

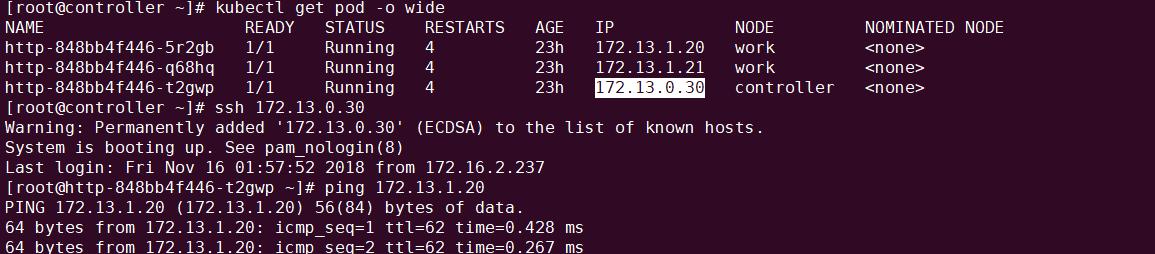

Q: How is cross-host networking implemented in k8s? Can every node reach the Pods on every other node, and can the Pods reach each other directly?

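Cross-host networking in k8s is provided by a network plugin. Common choices are Calico, Canal, Flannel, and Kube-router. Space is limited, so I won't go through each one. Instead I'll take Calico and Flannel and use them to fix the cross-host networking bug.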

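The cross-host networking bug is that Pods on different nodes cannot reach each other. According to the official documentation, a cross-host network configured as documented supports both Pod-to-node traffic and traffic between Pods on different nodes. In practice, however, iptables rules stop Pods on different nodes from reaching each other. Yes, you read that right: iptables rules. We never set any, so where did they come from? They are created by Kubernetes and its cross-host network plugin. Even if you temporarily delete them so the Pods can talk, the rules come back after a while. So let's get rid of these annoying iptables rules for good~~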

Calico

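Here is how to fix, on a Calico network, the bug where iptables rules stop Pods on different nodes from reaching each other. After some searching on Baidu and Google, I found many people recommending against the default network 192.168.0.0/16, because it can conflict with the network of your physical machines~~. Better safe than sorry, so let's pick a network nobody uses as the Pod network. I chose 172.13.0.0/16, for example.

Many Chinese bloggers also claim that Docker changed its iptables rules after 1.13, blocking traffic between Kubernetes Pods, so that you have to open that traffic up explicitly. I tried it, and it's nonsense. The problem was fixed long ago, but copy-and-paste bloggers keep reposting the claim, and it wasted a lot of my time.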
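You can check for a CIDR conflict before running `kubeadm init`. The sketch below is a minimal Python check: it tests whether a candidate Pod network overlaps any network your nodes or LAN already use, and whether the candidate lies inside the RFC 1918 private ranges (10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16).

The host networks in the example are hypothetical placeholders. Note that 172.13.0.0/16 is not inside 172.16.0.0/12, so it is public address space. It is only "unused" as long as nothing in your environment needs to reach real hosts in that range.

```python
import ipaddress
from typing import List

# RFC 1918 private IPv4 ranges.
PRIVATE_RANGES = [
    ipaddress.ip_network(r) for r in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def overlapping_networks(pod_cidr: str, host_cidrs: List[str]) -> List[str]:
    """Return the host networks that overlap the proposed Pod CIDR."""
    pod_net = ipaddress.ip_network(pod_cidr)
    return [c for c in host_cidrs if pod_net.overlaps(ipaddress.ip_network(c))]


def is_rfc1918(cidr: str) -> bool:
    """True if the whole network lies inside one RFC 1918 private range."""
    net = ipaddress.ip_network(cidr)
    return any(net.subnet_of(r) for r in PRIVATE_RANGES)


if __name__ == "__main__":
    # Hypothetical LAN/node networks: replace them with the ones you actually use.
    hosts = ["192.168.1.0/24", "10.0.0.0/24"]
    for candidate in ("192.168.0.0/16", "172.13.0.0/16"):
        print(candidate, "overlaps:", overlapping_networks(candidate, hosts),
              "RFC 1918:", is_rfc1918(candidate))
```

With the placeholder hosts, 192.168.0.0/16 is reported as overlapping 192.168.1.0/24. 172.13.0.0/16 overlaps nothing but is reported as not RFC 1918.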

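All of that is beside the point, though. To fix Pods on different nodes being unable to reach each other on a Calico network, you only need to disable IPIP mode. It is precisely the enabled IPIP mode that makes your iptables rules drop packets in cross-node Pod traffic.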

Let's edit the calico.yaml file:

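```
kubeadm init --kubernetes-version v1.12.0 --pod-network-cidr=172.13.0.0/16

# After initialization, edit calico.yaml
vim calico.yaml
```

```
- name: CALICO_IPV4POOL_CIDR
  value: 172.13.0.0/16
# Calico accepts Always, CrossSubnet or Never here; Never turns IP-in-IP off
- name: CALICO_IPV4POOL_IPIP
  value: "Never"
- name: FELIX_IPINIPENABLED
  value: "false"
```

```
kubectl apply -f calico.yaml

# If your network has already been created, you can edit calico-node in place
kubectl edit -n kube-system daemonset.extensions/calico-node

# If you are practicing k8s in VMs, also disable swap:
# swapoff turns it off now (kubelet refuses to run with swap on by default),
# and swappiness=0 tells the kernel to avoid swapping
swapoff -a
echo "vm.swappiness=0" >> /etc/sysctl.d/k8s.conf
sysctl --system
```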
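You may prefer to script the calico.yaml change instead of editing it in vim. The edit comes down to updating three entries in the calico-node container's `env` list.

The sketch below is a minimal Python version that works on that list once it is loaded as plain dicts. Loading and dumping the YAML (for example with a third-party YAML library) is assumed and left out. The target values mirror the edit, with IPIP set to `Never`, Calico's documented value for disabling IP-in-IP in the pool. `patch_env` is a hypothetical helper, not part of any Calico tooling.

```python
from typing import Dict, List

# Target values; "Never" is Calico's documented setting for disabling IP-in-IP.
DESIRED_ENV = {
    "CALICO_IPV4POOL_CIDR": "172.13.0.0/16",
    "CALICO_IPV4POOL_IPIP": "Never",
    "FELIX_IPINIPENABLED": "false",
}


def patch_env(env: List[dict], desired: Dict[str, str]) -> List[dict]:
    """Return a copy of a container env list with the desired name/value pairs set."""
    patched, seen = [], set()
    for entry in env:
        name = entry["name"]
        if name in desired:
            # Replace the value (dropping any valueFrom) but keep the entry's position.
            patched.append({"name": name, "value": desired[name]})
            seen.add(name)
        else:
            patched.append(dict(entry))
    # Append variables that the manifest did not define yet.
    for name, value in desired.items():
        if name not in seen:
            patched.append({"name": name, "value": value})
    return patched
```

The function returns a new list, so the original manifest data is left untouched. That makes it easy to diff before writing the file back.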

Flannel

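Now for Flannel, and the same bug where iptables rules stop Pods on different nodes from reaching each other. After some searching on Baidu and Google, I found that many people recommend simply flushing the iptables rules. The only thing to watch out for is that you have to do this on every node~~~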

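```
# Set the default policy of the INPUT and FORWARD chains to ACCEPT
iptables -P INPUT ACCEPT
iptables -P FORWARD ACCEPT
# Flush all rules in the filter table
iptables -F
# List the current rules to verify the result
iptables -L -n
```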
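Because this has to be repeated on every node, a quick way to confirm the result is to read the chain headers printed by `iptables -L -n`. Built-in chains print a header such as `Chain FORWARD (policy DROP)`, while user-defined chains print a reference count instead.

The sketch below is a minimal Python version of that check. It only parses text. Capturing the text on the node (for example with `subprocess.run(["iptables", "-L", "-n"], capture_output=True, text=True).stdout`, run as root) is left to you.

```python
import re
from typing import Dict

# Built-in chains print "Chain <NAME> (policy <TARGET>" (with -v, packet/byte
# counters follow the target); user-defined chains print "(N references)".
_POLICY_RE = re.compile(r"^Chain (\S+) \(policy (\w+)")


def chain_policies(iptables_output: str) -> Dict[str, str]:
    """Map each built-in chain to its default policy from `iptables -L -n` text."""
    policies = {}
    for line in iptables_output.splitlines():
        match = _POLICY_RE.match(line)
        if match:
            policies[match.group(1)] = match.group(2)
    return policies
```

After the commands above, `chain_policies(...)["FORWARD"]` should be `"ACCEPT"` on each node.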

Now Pods on different nodes can talk to each other freely~~

EFK

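There are many solutions for collecting, querying, and analyzing logs, and the most common is ELK. Our earlier tutorials already covered ELK, so this time we switch to an EFK architecture.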

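Here the F refers to Filebeat, which was rewritten from the source code of the original logstash-forwarder. When log volume is large, Logstash runs into high resource usage, and Filebeat was introduced to solve that problem. It is written in Go, runs without a Java environment, ships as an install package under 10 MB, and is efficient with memory and CPU. That makes it well suited to run as an agent on servers.

Note that the manifests below actually deploy Fluentd (td-agent, from the fluentd-elasticsearch image) as the collector. Fluentd is the component the F in EFK more commonly stands for.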

Create the dashboard service account

serviceaccount.yaml

```
apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: dashboard
roleRef:
  kind: ClusterRole
  name: view
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: dashboard
  namespace: kube-system
```

Create a PersistentVolume

Create a PersistentVolume to serve as the disk for Elasticsearch storage.

persistentvolume.yaml

```
apiVersion: v1
kind: PersistentVolume
metadata:
  name: elk-log-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany
  nfs:
    path: /nfs-share
    server: 172.16.2.237
    readOnly: false
```

Fluentd configuration (ConfigMap)

configmap.yaml

```
apiVersion: v1
kind: ConfigMap
metadata:
  name: fluentd-conf
  namespace: kube-system
data:
  td-agent.conf: |
    <match fluent.**>
      type null
    </match>

    # Example:
    # {"log":"[info:2016-02-16T16:04:05.930-08:00] Some log text here\n","stream":"stdout","time":"2016-02-17T00:04:05.931087621Z"}
    <source>
      type tail
      path /var/log/containers/*.log
      pos_file /var/log/es-containers.log.pos
      time_format %Y-%m-%dT%H:%M:%S.%NZ
      tag kubernetes.*
      format json
      read_from_head true
    </source>

    <filter kubernetes.**>
      type kubernetes_metadata
      verify_ssl false
    </filter>

    <source>
      type tail
      format syslog
      path /var/log/messages
      pos_file /var/log/messages.pos
      tag system
    </source>

    <match **>
      type elasticsearch
      user "#{ENV['FLUENT_ELASTICSEARCH_USER']}"
      password "#{ENV['FLUENT_ELASTICSEARCH_PASSWORD']}"
      log_level info
      include_tag_key true
      host elasticsearch-logging
      port 9200
      logstash_format true
      # Set the chunk limit the same as for fluentd-gcp.
      buffer_chunk_limit 2M
      # Cap buffer memory usage to 2MiB/chunk * 32 chunks = 64 MiB
      buffer_queue_limit 32
      flush_interval 5s
      # Never wait longer than 30 seconds between retries.
      max_retry_wait 30
      # Disable the limit on the number of retries (retry forever).
      disable_retry_limit
      # Use multiple threads for processing.
      num_threads 8
    </match>
```
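The container-log `<source>` above relies on three conventions:

- Docker's json-file driver writes one JSON object per line, as in the `# Example:` comment.
- in_tail expands `tag kubernetes.*` by replacing `*` with the file path, with `/` turned into `.`.
- The kubelet names the files in /var/log/containers as `<pod>_<namespace>_<container>-<container_id>.log`, which is roughly the information the kubernetes_metadata filter recovers from the tag.

The sketch below is a minimal Python illustration of all three. The file-name regex is a simplified assumption, not the plugin's exact pattern.

```python
import json
import re
from datetime import datetime, timezone

# Simplified pattern for kubelet log symlinks: <pod>_<namespace>_<container>-<64-hex id>.log
_CONTAINER_LOG_RE = re.compile(
    r"^(?P<pod>[^_]+)_(?P<namespace>[^_]+)_(?P<container>.+)-(?P<container_id>[0-9a-f]{64})\.log$"
)


def tail_tag(prefix: str, path: str) -> str:
    """Expand `tag <prefix>.*` like in_tail: the path with '/' replaced by '.'."""
    return prefix + "." + path.strip("/").replace("/", ".")


def parse_container_log_name(filename: str):
    """Split a /var/log/containers file name into pod, namespace, container and id."""
    match = _CONTAINER_LOG_RE.match(filename)
    return match.groupdict() if match else None


def parse_docker_json_line(line: str):
    """Parse one json-file log line into (record, timezone-aware UTC timestamp)."""
    record = json.loads(line)
    # Docker writes nanoseconds (fluentd's %N); Python's %f takes at most 6 digits,
    # so pad/truncate the fraction to microseconds before parsing.
    whole, _, frac = record["time"].rstrip("Z").partition(".")
    micros = (frac + "000000")[:6]
    ts = datetime.strptime(f"{whole}.{micros}", "%Y-%m-%dT%H:%M:%S.%f")
    return record, ts.replace(tzinfo=timezone.utc)
```

Feeding the example line from the config comment to `parse_docker_json_line` yields the `log`, `stream` and `time` fields that `format json` produces, with the timestamp truncated to microseconds.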

Overall configuration

logging.yaml

```
apiVersion: v1
kind: ReplicationController
metadata:
  name: elasticsearch-logging-v1
  namespace: kube-system
  labels:
    k8s-app: elasticsearch-logging
    version: v1
    kubernetes.io/cluster-service: "true"
spec:
  replicas: 2
  selector:
    k8s-app: elasticsearch-logging
    version: v1
  template:
    metadata:
      labels:
        k8s-app: elasticsearch-logging
        version: v1
        kubernetes.io/cluster-service: "true"
    spec:
      serviceAccount: dashboard
      containers:
      - image: registry.cn-hangzhou.aliyuncs.com/google-containers/elasticsearch:v2.4.1-1
        name: elasticsearch-logging
        resources:
          # need more cpu upon initialization, therefore burstable class
          limits:
            cpu: 1000m
          requests:
            cpu: 100m
        ports:
        - containerPort: 9200
          name: db
          protocol: TCP
        - containerPort: 9300
          name: transport
          protocol: TCP
        volumeMounts:
        - name: es-persistent-storage
          mountPath: /data
        env:
        - name: "NAMESPACE"
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
      volumes:
      - name: es-persistent-storage
        persistentVolumeClaim:
          claimName: elk-log
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: elk-log
  namespace: kube-system
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
  #selector:
  #  matchLabels:
  #    release: "stable"
  #  matchExpressions:
  #    - {key: environment, operator: In, values: [dev]}
---
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-logging
  namespace: kube-system
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "Elasticsearch"
spec:
  ports:
  - port: 9200
    protocol: TCP
    targetPort: db
  selector:
    k8s-app: elasticsearch-logging
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: fluentd-es-v1.22
  namespace: kube-system
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    version: v1.22
spec:
  template:
    metadata:
      labels:
        k8s-app: fluentd-es
        kubernetes.io/cluster-service: "true"
        version: v1.22
      # This annotation ensures that fluentd does not get evicted if the node
      # supports critical pod annotation based priority scheme.
      # Note that this does not guarantee admission on the nodes (#40573).
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        scheduler.alpha.kubernetes.io/tolerations: '[{"key": "node.alpha.kubernetes.io/ismaster", "effect": "NoSchedule"}]'
    spec:
      serviceAccount: dashboard
      containers:
      - name: fluentd-es
        image: registry.cn-hangzhou.aliyuncs.com/google-containers/fluentd-elasticsearch:1.22
        command:
        - '/bin/sh'
        - '-c'
        - '/usr/sbin/td-agent 2>&1 >> /var/log/fluentd.log'
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
        - name: config-volume
          mountPath: /etc/td-agent/
          readOnly: true
      #nodeSelector:
      #  alpha.kubernetes.io/fluentd-ds-ready: "true"
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: config-volume
        configMap:
          name: fluentd-conf
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kibana-logging
  namespace: kube-system
  labels:
    k8s-app: kibana-logging
    kubernetes.io/cluster-service: "true"
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: kibana-logging
  template:
    metadata:
      labels:
        k8s-app: kibana-logging
    spec:
      containers:
      - name: kibana-logging
        image: registry.cn-hangzhou.aliyuncs.com/google-containers/kibana:v4.6.1-1
        resources:
          # keep request = limit to keep this container in guaranteed class
          limits:
            cpu: 100m
          requests:
            cpu: 100m
        env:
        - name: "ELASTICSEARCH_URL"
          value: ""
        #- name: "KIBANA_BASE_URL"
        #  value: "/api/v1/proxy/namespaces/kube-system/services/kibana-logging"
        ports:
        - containerPort: 5601
          name: ui
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: kibana-logging
  namespace: kube-system
  labels:
    k8s-app: kibana-logging
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "Kibana"
spec:
  type: NodePort
  ports:
  - nodePort: 30005
    port: 5601
    protocol: TCP
    targetPort: ui
  selector:
    k8s-app: kibana-logging
  #type: ClusterIP
```
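Elasticsearch only starts if the claim `elk-log` binds to the NFS volume `elk-log-pv`. The sketch below is a simplified Python model of the main binding conditions:

- the storage class matches,
- the volume offers every access mode the claim asks for,
- the volume's capacity is at least the requested size.

It deliberately ignores selectors, volume mode, and node affinity.

One practical consequence: if your cluster has a default StorageClass, the DefaultStorageClass admission plugin assigns it to a claim that omits `storageClassName`. The claim then no longer matches a class-less PV like this one. The class names in the example are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet

# Binary suffixes only; enough for quantities such as "5Gi".
_BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Convert a binary-suffixed Kubernetes quantity such as '5Gi' to bytes."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # plain byte count


@dataclass(frozen=True)
class PersistentVolume:
    capacity: str
    access_modes: FrozenSet[str]
    storage_class: str = ""  # "" models a volume without storageClassName


@dataclass(frozen=True)
class PersistentVolumeClaim:
    request: str
    access_modes: FrozenSet[str]
    storage_class: str = ""


def can_bind(pv: PersistentVolume, pvc: PersistentVolumeClaim) -> bool:
    """Simplified check: same class, all requested modes offered, enough capacity."""
    return (
        pv.storage_class == pvc.storage_class
        and pvc.access_modes <= pv.access_modes
        and to_bytes(pv.capacity) >= to_bytes(pvc.request)
    )
```

Modelled this way, `elk-log-pv` (5Gi, ReadWriteMany) satisfies `elk-log` (5Gi, ReadWriteMany) only while neither side carries a storage class.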

Deploy

```
kubectl apply -f serviceaccount.yaml
kubectl apply -f configmap.yaml
kubectl apply -f persistentvolume.yaml
kubectl apply -f logging.yaml
```

Fixing the bug

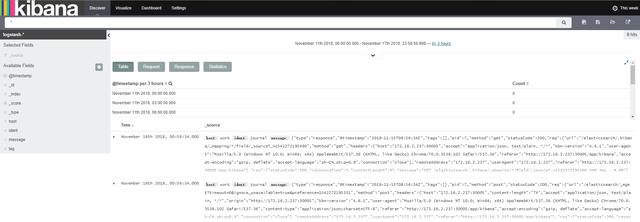

When we open Kibana in a browser, we get the following result:

{"statusCode":404,"error":"Not Found","message":"Not Found"}

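This happens because the URL path /api/v1/namespaces/kube-system/services/kibana-logging/proxy/app/kibana is also passed through to Kibana behind the proxy, and Kibana cannot handle it. The cause is a misconfigured env setting. I have already fixed it in the logging.yaml above, where the KIBANA_BASE_URL entry is commented out~~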

Now access it again:

Weave

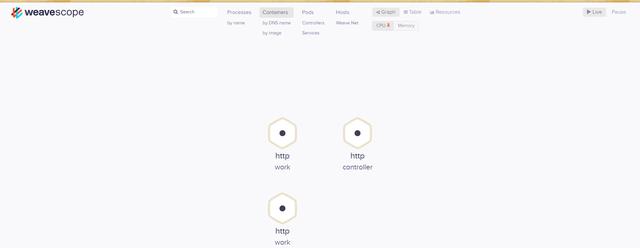

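Weave Scope is a visual monitoring tool for Docker and Kubernetes. Scope provides a complete top-down view of the cluster infrastructure and applications, so users can easily monitor and troubleshoot distributed containerized applications in real time.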

Installation

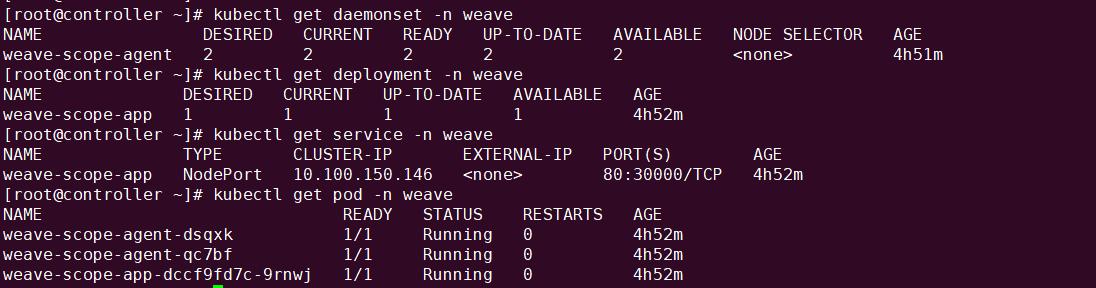

```
kubectl apply --namespace weave -f " version | base64 | tr -d '\n')"
kubectl get service -n weave
# Change the Service type to NodePort in the editor
kubectl edit service <service_name> -n weave
kubectl get pod -n weave
```
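The install command pipes the `version` output through `base64 | tr -d '\n'`. `base64` wraps its output into fixed-width lines (76 characters by default in GNU coreutils), and `tr -d '\n'` joins them so the encoded text stays one unbroken string inside the command.

The sketch below reproduces that pipeline with Python's standard `base64` module. The function name is hypothetical.

```python
import base64


def encode_like_shell_pipeline(text: str) -> str:
    """Approximate `... | base64 | tr -d '\\n'`: base64, then drop the line breaks."""
    # encodebytes() inserts a newline after every 76 output characters,
    # similar to GNU base64's default wrapping.
    wrapped = base64.encodebytes(text.encode("utf-8")).decode("ascii")
    # The `tr -d '\n'` step: join the wrapped lines into a single string.
    return wrapped.replace("\n", "")
```

The result equals `base64.b64encode(...)`, which never wraps. That is why `tr -d '\n'` is needed in the shell version but has no counterpart when encoding directly in Python.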

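- DaemonSet weave-scope-agent: the scope agent that runs on every node in the cluster and collects data.

- Deployment weave-scope-app: the Scope application, which gets data from the agents and presents it through a web UI for users to interact with.

- Service weave-scope-app: of type ClusterIP by default. For convenience it was changed to NodePort with kubectl edit.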
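Switching weave-scope-app from ClusterIP to NodePort only changes `spec.type`. Optionally you can also pin a `nodePort`, as logging.yaml does with 30005 for Kibana; it must fall within kube-apiserver's default `--service-node-port-range` of 30000-32767.

The sketch below is a minimal Python version of that change on a Service manifest held as a dict. The helper name and the example manifest are hypothetical. The same type change can also be applied without an editor via `kubectl patch service <service_name> -n weave -p '{"spec":{"type":"NodePort"}}'`.

```python
import copy
from typing import Optional

# kube-apiserver's default --service-node-port-range is 30000-32767.
NODE_PORT_MIN, NODE_PORT_MAX = 30000, 32767


def as_node_port(service: dict, node_port: Optional[int] = None) -> dict:
    """Return a copy of a Service manifest switched to type NodePort."""
    patched = copy.deepcopy(service)
    spec = patched.setdefault("spec", {})
    spec["type"] = "NodePort"
    if node_port is not None:
        if not NODE_PORT_MIN <= node_port <= NODE_PORT_MAX:
            raise ValueError(f"nodePort {node_port} is outside {NODE_PORT_MIN}-{NODE_PORT_MAX}")
        # Pin the first port; without this Kubernetes allocates one from the range.
        spec["ports"][0]["nodePort"] = node_port
    return patched
```

Leaving `node_port` unset is usually enough. Kubernetes then allocates a free port, which `kubectl get service -n weave` shows.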

Finally, open the Weave Scope UI directly.